Why Do You Need Containers Instead of “Virtual Machine Images” for Your Applications?

This is a common question for anyone thinking about switching to running applications in containers. It is especially relevant today, because many companies are planning a move to the cloud or already run their workloads there. All major public cloud providers offer a way to create a “virtual machine image” with your applications bundled inside. For example, on AWS you can create an AMI (Amazon Machine Image) with your applications and their dependencies packaged inside. You can even share your AMI with other users, or sell it as a product to other interested customers. Hence the question: why on earth do you need a container? Why can’t I use these virtual machine images directly instead?

To answer that question, let’s start with some fundamentals that we usually overlook.

Nobody uses an operating system. Yes, that’s right. Nobody uses an operating system for their day-to-day work. People use programs, and programs are the only thing we care about. Right now, while writing this, I am not using an operating system but a program: a text editor. The bottom line is that the vast majority of computer users never think about the operating system. They just care about programs.

Let me clarify what I said above. We never interact with the operating system directly. We use programs, and programs use the interfaces (APIs such as system calls) provided by the operating system to get work done. The role of the operating system is to help programs run.

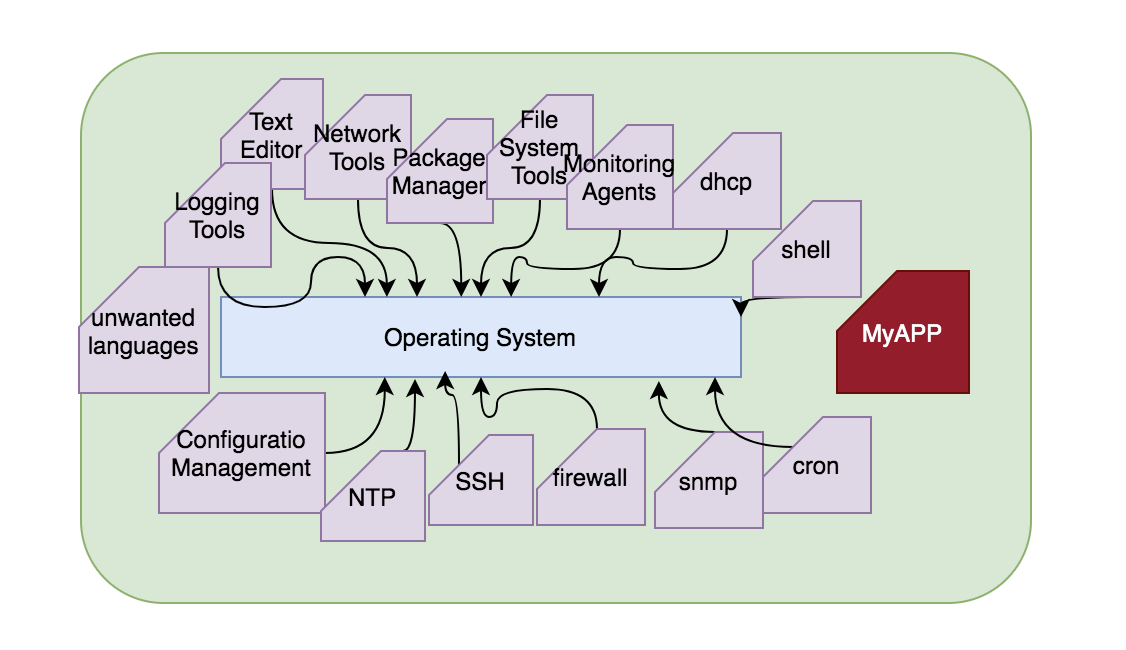

Companies only care about their applications, and that those applications work as expected in a given environment.

So the question is: why ship an entire operating system (which is quite big) when only a tiny part of it is dedicated to your application? You should ship only your application and its dependencies, nothing more, nothing less.

Typically, when you create a virtual machine image, it contains many components that the operating system distribution includes by default. Even though you only care about the “red box” (i.e., your app) in the image above, you ship the entire thing with everything around it. You should ship only the red box and whatever the red box needs. It’s simply foolish to build and maintain an entire operating system just to run your application.

This is where containers step in. Docker provides the tooling to package your application with just the items it requires and ship it anywhere. Instead of shipping an entire operating system, you ship only the application and what it needs.

Your company can now build and share the application as Docker images rather than VM images. Docker images are typically measured in megabytes (often around 100 MB or less, depending on the base image), whereas a virtual machine image for the same application easily runs into gigabytes.

Let us approach the same problem from a different angle.

What do you need from an operating system for running your applications?

- You need storage space. Your application binary uses some storage space, and it may also need space for its dependency libraries, runtimes, and so on. It is much better if this storage space is completely isolated from other applications.

- You need a network. Your application will typically provide its services to the outside world over a network port, so you need a network interface, an IP address, and a port.

- PID numbers. Every process on a computer has a unique process ID (PID). Your application will be a single process or a group of processes, so it needs PIDs.

- Resource isolation. Nobody else should steal the resources your app needs: even if other applications run on the same operating system, they should not eat up all the resources. Likewise, your application should respect the others and not steal their resources.

- Segregated users and groups.

- Segregated hostname and domain name.

If you can achieve all of the above in a segregated, secure manner, you have pretty much everything you need to run your application. This is exactly what containerisation is all about. Docker simply sets up all of the above requirements so that your app can function properly. Remember what we discussed earlier? Programs are the components that talk directly to the operating system. So Docker, itself a program, talks to the operating system and asks it to create these requirements for your application.

Docker is essentially software, with an API, that helps achieve this.

The Linux kernel provides two key features for this: cgroups (control groups) and namespaces. The idea behind cgroups is to create a control group for an application and then limit the resources available to it. When you set up a control group, you can specify CPU and memory limits, and the processes running inside that control group cannot consume more than those limits.
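
To make this concrete, here is a minimal Python sketch of how CPU and memory limits translate into cgroup v2 interface files. It assumes a cgroup v2 hierarchy mounted at the usual `/sys/fs/cgroup`, the `cpu` and `memory` controllers enabled for the parent group, and root privileges for the final step; the helper names and the group name `myapp` are hypothetical. In cgroup v2, `cpu.max` holds a quota and a period in microseconds, and `memory.max` holds a byte limit.

```python
from pathlib import Path

# Default cgroup v2 CPU period: 100,000 microseconds (100 ms).
CPU_PERIOD_US = 100_000


def cgroup_v2_limits(cpus: float, memory_mib: int) -> dict[str, str]:
    """Translate friendly limits into the contents of cgroup v2 interface files."""
    if cpus <= 0 or memory_mib <= 0:
        raise ValueError("limits must be positive")
    # cpu.max is "<quota> <period>": the group may run for `quota` microseconds
    # out of every `period` microseconds, so 0.5 CPU -> "50000 100000".
    quota_us = round(cpus * CPU_PERIOD_US)
    return {
        "cpu.max": f"{quota_us} {CPU_PERIOD_US}",
        # memory.max is a hard limit in bytes.
        "memory.max": str(memory_mib * 1024 * 1024),
    }


def apply_limits(cgroup_dir: Path, limits: dict[str, str], pid: int) -> None:
    """Create the group, write its limits, then move `pid` into it (needs root)."""
    cgroup_dir.mkdir(exist_ok=True)  # on cgroupfs, the kernel creates the interface files
    for name, value in limits.items():
        (cgroup_dir / name).write_text(value)
    # Writing a PID to cgroup.procs places that process under the limits.
    (cgroup_dir / "cgroup.procs").write_text(str(pid))
```

For example, `cgroup_v2_limits(0.5, 256)` yields `{"cpu.max": "50000 100000", "memory.max": "268435456"}`; passing that to `apply_limits(Path("/sys/fs/cgroup/myapp"), ..., pid)` on a real host would cap the process at half a CPU and 256 MiB. The `--cpus` and `--memory` options of `docker run` configure limits of this kind for you.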

Normally, PID numbers are unique across the whole operating system: you cannot have two different processes with PID 10 at the same time on the same system. Namespaces, however, create a segregated space for allocating PIDs. PID namespaces form a tree beneath the system’s main (root) PID namespace, with a separate subtree for each application. When we create a new PID namespace, the first process started in it gets PID 1, and later processes get their own numbers within that namespace. Each of these processes also has a separate PID in every namespace above it, which is how the host can still see it. Because PID namespaces are nested as a tree, a process in a namespace cannot see the PIDs of processes in the namespaces above it, but it can see those in the namespaces beneath it.
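
The nesting rules above can be illustrated with a toy Python model. This is a simplified simulation, not kernel code: the class and method names are hypothetical, and PIDs are handed out sequentially, whereas the real kernel reuses freed numbers. A process started in a namespace receives a PID in that namespace and in every ancestor, so each namespace’s table lists its own processes plus those of its descendants, never those of its ancestors.

```python
class PidNamespace:
    """Toy model of a Linux PID namespace (hypothetical, for illustration)."""

    def __init__(self, parent: "PidNamespace | None" = None) -> None:
        self.parent = parent
        self.table: dict[int, str] = {}  # PID -> process name, as seen from here
        self._next_pid = 1

    def chain(self):
        """Yield this namespace, then each ancestor up to the root."""
        ns = self
        while ns is not None:
            yield ns
            ns = ns.parent

    def spawn(self, name: str) -> dict["PidNamespace", int]:
        """Start a process here; it gets a PID in this namespace and every ancestor."""
        pids = {}
        for ns in self.chain():
            pid = ns._next_pid
            ns._next_pid += 1
            ns.table[pid] = name
            pids[ns] = pid
        return pids


if __name__ == "__main__":
    root = PidNamespace()
    root.spawn("init")  # PID 1 on the host
    root.spawn("sshd")  # PID 2 on the host
    container = PidNamespace(parent=root)
    pids = container.spawn("app")
    print(pids[container], pids[root])  # 1 3: PID 1 inside, PID 3 on the host
    print(container.table)  # {1: 'app'}: init and sshd are invisible here
    print(root.table)  # {1: 'init', 2: 'sshd', 3: 'app'}
```

Modelling visibility as “which tables a process was written into” keeps the rule simple: a namespace sees exactly the processes registered in its own table.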

Using these kernel features, Docker creates segregated namespaces for the network, storage (mounts), PIDs, users and groups, hostname, and so on, and uses cgroups to limit resources. This collective segregated space where your programs run is called a container. From a traditional standpoint, you get many of the benefits of virtualisation, but in a lean and lightweight manner.
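
On a Linux host you can inspect these namespaces yourself: each process has one entry per namespace type under `/proc/<pid>/ns`, and two processes share a namespace when those entries point to the same identifier. The short Python sketch below reads them. The mapping from the earlier list of requirements to kernel mechanisms is an informal summary, the helper name `namespace_ids` is hypothetical, and the reader only works on Linux.

```python
import os

# Informal mapping from the requirements listed earlier to the Linux mechanism
# behind each one. Namespace names match entries in /proc/<pid>/ns; resource
# limits come from cgroups instead.
ISOLATION = {
    "storage (own / directory)": "mnt",
    "network interface, IP, port": "net",
    "PID numbers": "pid",
    "users and groups": "user",
    "hostname and domain name": "uts",
    "CPU and memory limits": "cgroups (resource control, not a namespace)",
}


def namespace_ids(pid: str = "self") -> dict[str, str]:
    """Return {namespace type: identifier} for a process, e.g. {'pid': 'pid:[<inode>]'}."""
    ns_dir = f"/proc/{pid}/ns"
    ids = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            ids[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # e.g. no permission to inspect another user's process
    return ids


if __name__ == "__main__":
    for requirement, mechanism in ISOLATION.items():
        print(f"{requirement:30} -> {mechanism}")
    # Run this on the host and inside a container: the identifiers differ.
    print(namespace_ids())
```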

Remember, wherever the kernel features mentioned above are available, you can run your container.

So containers are extremely lightweight, because they carry no unwanted software or programs. They also do not include their own operating system kernel; they share the host’s kernel. A container holds just your application and its dependencies, and nothing else.

Docker provides an ecosystem and tooling for packaging your application as images. An image is essentially a read-only template for creating a container. The image for “application A” contains its binaries and libraries, defines the input parameters it takes at startup, and embeds the logic to start the application automatically when you start a container from that image. So you have a strong assurance that if it worked on a developer’s laptop, it will work elsewhere too.
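
The “logic to start the application automatically” usually lives in the image’s `ENTRYPOINT` and `CMD` settings, and the parameters you pass to `docker run` interact with them. The sketch below models Docker’s documented rule for the exec (JSON array) form only: arguments given to `docker run` replace `CMD` and are appended after `ENTRYPOINT`. The shell form behaves differently and is not modelled; the function name and the `/usr/local/bin/app-a` path are hypothetical.

```python
from typing import Optional, Sequence


def resolve_start_command(
    entrypoint: Optional[Sequence[str]],
    cmd: Optional[Sequence[str]],
    run_args: Optional[Sequence[str]] = None,
) -> list[str]:
    """Model how exec-form ENTRYPOINT, CMD and `docker run` arguments combine."""
    # Arguments passed at run time replace the image's default CMD entirely.
    args = list(run_args) if run_args else list(cmd or [])
    # ENTRYPOINT stays fixed; the (possibly overridden) arguments follow it.
    command = list(entrypoint or []) + args
    if not command:
        raise ValueError("no ENTRYPOINT, CMD, or run-time command to start")
    return command


if __name__ == "__main__":
    entrypoint = ["/usr/local/bin/app-a"]  # hypothetical binary baked into the image
    default_cmd = ["--port", "8080"]  # default startup parameters
    print(resolve_start_command(entrypoint, default_cmd))
    # ['/usr/local/bin/app-a', '--port', '8080']
    print(resolve_start_command(entrypoint, default_cmd, ["--port", "9090"]))
    # ['/usr/local/bin/app-a', '--port', '9090']
```

Keeping the binary in `ENTRYPOINT` and the defaults in `CMD` is what lets the same image start on its own while still accepting different parameters at startup.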

The number of security loopholes in a system grows with the amount of software it contains. If your application does not need a particular component, it should not be in the system, because attackers can use any door to get in. The fewer items you have in your system, the smaller your attack surface and the more secure you are. Containerised applications tend to follow this model, because they do not carry the unwanted tools an OS comes with by default. This lean approach makes your application lightweight and more secure.

Creating and booting a virtual machine typically takes much longer, but you can create and destroy a container in seconds. This is because a container does not boot an operating system; it runs your application directly in a segregated space. This speeds up both development and operations.

Containers Are Natively Immutable

As we discussed in the previous section, the kernel creates namespaces for storage, network, PIDs, users and groups, and so on when a container is created. In a Linux operating system, the “/” directory is the root of all other directories; every directory branches out of it. That said, every application can have its own “/” directory. This means we lock a process into a particular location, and from that process’s perspective the “/” directory is different: it sees that location as “/” and nothing above it.

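The classic Unix mechanism for this is called chroot. (Container runtimes such as Docker typically use the related pivot_root call inside a mount namespace, but the idea is the same.)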
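
Python’s standard library exposes the real system call as `os.chroot()` (Unix only, and it requires root), usually followed by `os.chdir("/")`. Because that cannot run unprivileged, the sketch below instead models how a path seen by a jailed process maps onto the host: “/” is the jail directory, and “..” at the top simply stays at “/”. It is a lexical model that ignores symlinks; the function name `host_path` and the `/srv/jail` directory are hypothetical.

```python
import posixpath


def host_path(new_root: str, path_in_jail: str) -> str:
    """Map an absolute path as seen inside a chroot jail to its host path (symlinks ignored)."""
    # Treat the path as absolute inside the jail and collapse "." and "..".
    # normpath never climbs above "/", mirroring how ".." at a root directory
    # refers back to that root, so the process cannot walk out of its jail.
    inside = posixpath.normpath("/" + path_in_jail.lstrip("/"))
    # Re-anchor the result under the jail directory on the host.
    return posixpath.normpath(posixpath.join(new_root, inside.lstrip("/")))


if __name__ == "__main__":
    print(host_path("/srv/jail", "/etc/passwd"))  # /srv/jail/etc/passwd
    print(host_path("/srv/jail", "/../../etc/shadow"))  # /srv/jail/etc/shadow
```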

Now imagine making this chroot directory immutable. This is what Docker containers are known for. The container image (the read-only template for creating the container), which contains the root directory with the application binaries and dependencies, is immutable: once the image is built, it cannot be changed. Changes made inside a running container go to a separate writable layer on top of the image, not into the image itself.

In practice, you create a new image rather than modifying an existing one. And even if an attacker gains access to your container, they won’t easily be able to reach any other part of the system, because the process inside the container only knows about its segregated “/” and nothing else.
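
One way to see why you build a new image instead of editing an old one: Docker identifies images and their layers by SHA-256 digests of their content, so any change produces a different identity. The toy Python model below illustrates that idea. It is a deliberate simplification (a real image is a stack of layer archives plus a JSON config, not a dictionary of files), and the `Image` class and `with_file` method are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Image:
    """Toy immutable image: a sorted tuple of (path, content) pairs (hypothetical)."""

    files: tuple[tuple[str, str], ...]

    @property
    def digest(self) -> str:
        # Identity is derived from the content, so equal content gives equal digests.
        payload = json.dumps(self.files).encode("utf-8")
        return "sha256:" + hashlib.sha256(payload).hexdigest()

    def with_file(self, path: str, content: str) -> "Image":
        """Return a NEW image with one file added or replaced; self is untouched."""
        updated = dict(self.files)
        updated[path] = content
        return Image(tuple(sorted(updated.items())))


if __name__ == "__main__":
    v1 = Image((("/app/main.py", "print('v1')"),))
    v2 = v1.with_file("/app/main.py", "print('v2')")
    print(v1.digest != v2.digest)  # True: the change produced a new image
    print(dict(v1.files)["/app/main.py"])  # the original still holds print('v1')
```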
